Aria: The First Open Multimodal Native MoE Model

Rhymes AI is proud to introduce Aria, the world's first open-source, multimodal native Mixture-of-Experts (MoE) model.

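In short, Aria features:

Multimodal Native Understanding

- State-of-the-art performance on a wide range of multimodal and language tasks

- Pre-trained from scratch on a mixture of multimodal and language data

Lightweight and Fast

- Fine-grained mixture-of-experts model with 3.9B activated parameters per token

- Efficient and informative visual encoding of variable image sizes and aspect ratios

Long Context Window

- Long multimodal context window of 64K tokens, captioning a 256-frame video in 10 seconds

Open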

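Multimodal Native Performance

Aria processes text, images, videos, and code all at once, without requiring a separate setup for each type, demonstrating the advantages of a multimodal native model.

We provide a quantifiable definition for the term multimodal native:

A multimodal native model is a single model with strong understanding capabilities across multiple input modalities (e.g., text, code, image, video) that matches or exceeds modality-specialized models of similar capacity.

We compared Aria against the best open and closed multimodal native models on established benchmarks, highlighting the following key observations:

- Best-in-Class Performance: Aria is the leading multimodal native model, demonstrating clear advantages over Pixtral-12B and Llama3.2-11B across multimodal, language, and coding tasks.

- Competitive Against Proprietary Models: Aria performs competitively against proprietary models such as GPT-4o and Gemini-1.5 on multimodal tasks, including document understanding, chart reading, scene text recognition, and video understanding.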

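- Parameter Efficiency: Aria is the most parameter-efficient open model. Thanks to the MoE framework, Aria activates only 3.9B parameters per token, whereas models such as Pixtral-12B and Llama3.2-11B activate all of their parameters.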
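To put the activated-parameter comparison in perspective, the Python sketch below applies the common rule of thumb that a decoder forward pass costs roughly 2 FLOPs per active parameter per token. Aria's 3.9B figure comes from the text above; treating Pixtral-12B and Llama3.2-11B as 12B and 11B fully activated parameters is an approximation read off the model names, and the estimate ignores attention and vision-encoder costs.

```python
# Activated parameters per token, in billions.
# Aria's value is from the text; the dense models' values are
# approximations read off their names (all parameters active).
ACTIVE_PARAMS_B = {
    "Aria (MoE)": 3.9,
    "Pixtral-12B (dense)": 12.0,
    "Llama3.2-11B (dense)": 11.0,
}


def forward_gflops_per_token(active_params_b: float) -> float:
    """Rule of thumb: ~2 FLOPs per active parameter per token (forward pass only)."""
    return 2 * active_params_b


aria = ACTIVE_PARAMS_B["Aria (MoE)"]
for name, params in ACTIVE_PARAMS_B.items():
    gflops = forward_gflops_per_token(params)
    print(f"{name}: ~{gflops:.1f} GFLOPs/token, {params / aria:.1f}x Aria")
```

Under this approximation, each dense model spends roughly three times Aria's per-token decoder compute.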

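Long Multimodal Input Understanding

Multimodal data is often complex, involving long sequences that combine visual and textual content, such as videos with subtitles or long documents. For a model to be effective in real-world applications, it must be able to understand and process such data efficiently.

Aria excels in this area, demonstrating superior long multimodal input understanding. It outperforms larger open models, showing that it is both efficient and effective despite its smaller size. Compared with proprietary models, Aria surpasses GPT-4o mini on long video understanding and Gemini-1.5-Flash on long document understanding. This makes Aria a preferred choice for processing large volumes of multimodal data in a compute- and time-efficient manner, delivering faster and more accurate results in real-world scenarios.

Instruction Following

Aria is highly effective at understanding and following instructions on both multimodal and language inputs, outperforming top open-source models on both MIA-Bench and MT-Bench.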

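Multimodal Native Training

Aria is pre-trained from scratch using a 4-stage training pipeline, ensuring that the model progressively learns new capabilities while retaining previously acquired knowledge.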

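1. Language Pre-training

- Pre-trains the MoE decoder on a large, curated language dataset that includes programming code.

- Uses a next-token prediction loss so that the MoE learns general knowledge about the world.

- Consumes 6.4T text tokens.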

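2. Multimodal Pre-training

- Pre-trains the MoE decoder and the vision encoder on a mixture of language and multimodal data.

- Equips the model with broad multimodal understanding while maintaining or improving its language understanding.

- The dataset includes:

  - 1T high-quality language tokens.

  - 400B multimodal tokens from a variety of sources, such as:

    - Interleaved image-text sequences from Common Crawl.

    - Synthetic image captions.

    - Document transcriptions and question-answer pairs.

    - Synthetic video captions and question-answer pairs.

- Rigorous data curation and filtering procedures ensure data quality and safety.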

3. Multimodal Long-Context Pre-training

- Extends the model's context window to 64K tokens.

- Processes 12B language tokens and 21B multimodal tokens; 69% of these 33B tokens are long sequences.

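4. Multimodal Post-training

- Anneals the learning rate to help the model converge.

- Improves the model's question-answering and instruction-following capabilities.

- Consumes 20B tokens of high-quality data.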
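As a rough tally of the four stages, the sketch below adds up the token counts stated for each one. It assumes each stage's budget is exactly the sum of the figures listed and does not account for repeated epochs or data reuse, which the text does not specify.

```python
# Token budget per training stage, in billions, as stated in the pipeline description.
STAGES_B = {
    "1. Language pre-training": 6_400,                   # 6.4T text tokens
    "2. Multimodal pre-training": 1_000 + 400,           # 1T language + 400B multimodal
    "3. Multimodal long-context pre-training": 12 + 21,  # 12B language + 21B multimodal
    "4. Multimodal post-training": 20,                   # 20B high-quality tokens
}

LONG_SEQUENCE_SHARE = 0.69  # share of stage-3 tokens that are long sequences

for stage, billions in STAGES_B.items():
    print(f"{stage}: {billions:,}B tokens")

total_b = sum(STAGES_B.values())
print(f"Total: {total_b:,}B tokens (~{total_b / 1000:.2f}T)")

long_seq_b = LONG_SEQUENCE_SHARE * STAGES_B["3. Multimodal long-context pre-training"]
print(f"Stage 3 long-sequence tokens: ~{long_seq_b:.1f}B")
```

Under these assumptions the total comes to about 7.85T tokens, over 80% of which are spent in language pre-training, and about 22.8B of stage 3's 33B tokens are long sequences.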

Qualitative Examples

Multimodal Native Reasoning Combining Vision, Language, and Coding Capabilities

Prompt

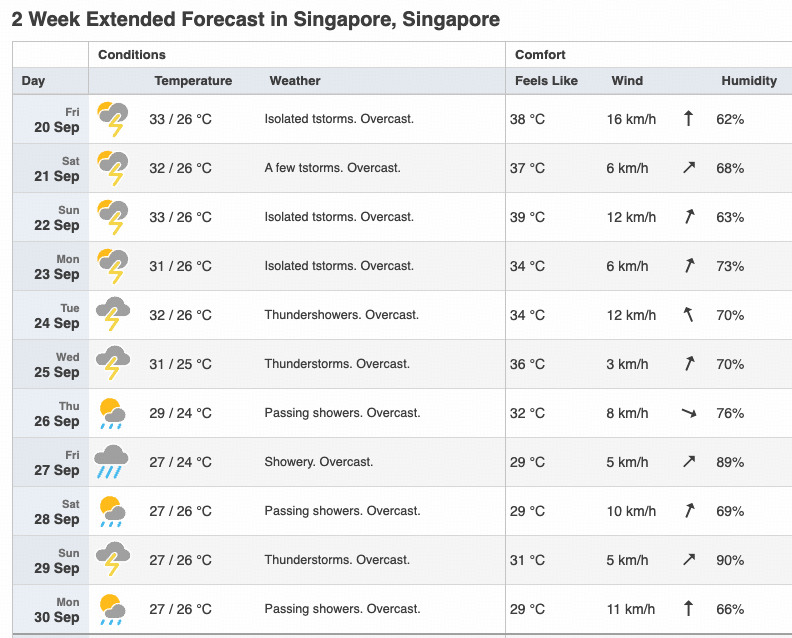

Organize the highest temperature, lowest temperature, and humidity for all even dates into a table.

Write a python code to draw a line chart for the temperatures, and a bar chart for the humidity. Use red and green colors for the temperatures, and use blue color for the humidity.

(Source: https://www.timeanddate.com/weather/singapore/singapore/hourly)


Aria Response

Sure! The table of temperature, humidity for even dates is as below:

| Date | Highest Temperature | Lowest Temperature | Humidity |
|---------|---------------------|--------------------|----------|
| 20 Sep | 33 °C | 26 °C | 62% |
| 22 Sep | 33 °C | 26 °C | 63% |
| 24 Sep | 32 °C | 26 °C | 70% |
| 26 Sep | 29 °C | 24 °C | 76% |
| 28 Sep | 27 °C | 26 °C | 69% |
| 30 Sep | 27 °C | 26 °C | 66% |

The Python code to draw the line chart for temperatures and the bar chart for humidity is as follows:

```python
import matplotlib.pyplot as plt

# Data for even dates
days = ['20 Sep', '22 Sep', '24 Sep', '26 Sep', '28 Sep', '30 Sep']
high_temps = [33, 33, 32, 29, 27, 27]
low_temps = [26, 26, 26, 24, 26, 26]
humidity = [62, 63, 70, 76, 69, 66]

# Line chart for temperatures
plt.figure(figsize=(14, 6))
plt.subplot(1, 2, 1)
plt.plot(days, high_temps, 'r-', label='Highest Temperature', marker='o')
plt.plot(days, low_temps, 'g-', label='Lowest Temperature', marker='o')
plt.xlabel('Days')
plt.ylabel('Temperature (°C)')
plt.title('Temperature Variation')
plt.legend()
plt.grid(True)

# Bar chart for humidity
plt.subplot(1, 2, 2)
plt.bar(days, humidity, color='blue', alpha=0.7)
plt.xlabel('Days')
plt.ylabel('Humidity (%)')
plt.title('Humidity')
plt.ylim(0, 100)
plt.grid(axis='y')

plt.tight_layout()
plt.show()
```

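Using the code snippet provided by Aria, we can generate the following visualization:

Multimodal Native Reasoning - Financial Report Analysis

Prompt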

How does the company's profit margin change from year 2020 to 2021? What factors cause such changes? Answer step by step.

Source: https://www.financestrategists.com/wealth-management/financial-statements/


Aria Response

To determine how the company’s profit margin changed from 2020 to 2021, we need to follow these steps:

#### 1. Calculate the profit margins for both years:

- **2020**:

- Net income: $57,411 million

- Total net sales: $274,515 million

- Profit margin = (Net income / Total net sales) * 100 = (57,411 / 274,515) * 100 ≈ 20.91%

- **2021**:

- Net income: $94,680 million

- Total net sales: $365,817 million

- Profit margin = (Net income / Total net sales) * 100 = (94,680 / 365,817) * 100 ≈ 25.88%

#### 2. Analyze the factors causing the change:

- **Increase in Net Income**: The net income increased from $57,411 million in 2020 to $94,680 million in 2021, showing a significant rise of $37,269 million.

- **Sales Growth**: Total net sales also increased from $274,515 million in 2020 to $365,817 million in 2021, indicating a strong growth in revenue.

- **Cost Management**: The cost of sales increased from $151,286 million in 2020 to $192,266 million in 2021, but the percentage increase in sales outpaced the increase in costs, leading to a higher profit margin.

- **Operating Expenses**: Operating expenses grew from $38,668 million in 2020 to $43,887 million in 2021, but the rise in sales was more substantial, positively impacting the profit margin.

Therefore, the company's profit margin improved from approximately 20.91% in 2020 to approximately 25.88% in 2021. This improvement is primarily due to a substantial increase in net income and effective cost management relative to the growth in total net sales.
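The arithmetic in Aria's answer can be rechecked with a few lines of Python. The sketch below recomputes the two margins and the year-over-year changes from the figures quoted in the response; it only verifies the calculations and does not check the figures against the source statement.

```python
def profit_margin(net_income: float, net_sales: float) -> float:
    """Net profit margin in percent: net income / total net sales * 100."""
    return net_income / net_sales * 100


def pct_change(old: float, new: float) -> float:
    """Year-over-year change in percent."""
    return (new - old) / old * 100


# (2020, 2021) values in millions of USD, as quoted in Aria's response.
FIGURES = {
    "Net income": (57_411, 94_680),
    "Total net sales": (274_515, 365_817),
    "Cost of sales": (151_286, 192_266),
    "Operating expenses": (38_668, 43_887),
}

income, sales = FIGURES["Net income"], FIGURES["Total net sales"]
margin_2020 = profit_margin(income[0], sales[0])
margin_2021 = profit_margin(income[1], sales[1])
print(f"Profit margin: {margin_2020:.2f}% (2020) -> {margin_2021:.2f}% (2021)")

for item, (y2020, y2021) in FIGURES.items():
    print(f"{item}: {pct_change(y2020, y2021):+.1f}%")
```

Sales grow by about 33%, while the quoted cost of sales grows by about 27% and operating expenses by about 13.5%, which is consistent with the response's conclusion that revenue growth outpaced cost growth.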

Long Multimodal Context Window

Prompt

Based on the five species in the images, draw a food chain. Explain the role of each species in the food chain.

Aria Response

1. **Mushrooms (Image 1)**: Decomposers, breaking down dead organic matter and recycling nutrients.

2. **Grass (Image 4)**: Primary producers, converting sunlight into energy via photosynthesis.

3. **Grasshopper (Image 2)**: Primary consumers, herbivores feeding on plants.

4. **Mouse (Image 3)**: Secondary consumers, herbivores that eat plants and possibly small insects.

5. **Hawk (Image 5)**: Tertiary consumers, predators that eat mice and other small animals.

### Food Chain:

Grass → Grasshopper → Mouse → Hawk → Mushrooms

### Explanation:

- Grass serves as the base, providing energy to grasshoppers.

- Grasshoppers eat the grass and are then consumed by mice.

- Mice are preyed upon by hawks.

- Hawks are apex predators, feeding on mice.

- Mushrooms decompose dead matter, recycling nutrients back into the ecosystem.

Long Multimodal Input - Paper Reading

Prompt

Summarize the key contribution of the paper, and explain how it uses various figures and tables to showcase such contribution.

Full paper: https://arxiv.org/abs/2407.15754


Aria Response

The paper introduces LONGVIDEOBENCH, a benchmark for evaluating Large Multimodal Models (LMMs) in long-context video-language understanding. It addresses the challenge of processing longer video inputs by proposing a question-answering benchmark that tests the models' ability to understand and reason about video contexts interleaved with up to an hour-long video. This benchmark includes 3,763 videos with diverse themes and 6,678 human-annotated multiple-choice questions across 17 categories.

The key contribution is the comprehensive evaluation of LMMs' performance on long-form video understanding, highlighting the limitations of existing models in handling extended inputs. The paper demonstrates that while proprietary models like GPT-4 and Gemini-1.5-Pro show improvements with longer inputs, open-source models lag significantly behind.

Figures and tables are used to illustrate these points:

**Figure 1:** Demonstrates the performance of different models on the benchmark, showing how accuracy varies with the number of frames.

**Figure 2:** Provides examples of the 17 categories of referring reasoning questions.

**Table 1:** Lists the benchmark and previous benchmarks for comparison.

**Table 2:** Details the 17 categories of referring reasoning questions.

**Table 3:** Shows statistics of videos in LONGVIDEOBENCH by category groups and duration.

**Table 5:** Validation set results categorized by duration groups.

**Table 7:** Test set leaderboard of LMMs by duration groups and question categories.

These visual aids help in understanding the performance metrics, the diversity of the dataset, and the specific challenges posed by longer videos and complex questions, thus emphasizing the need for improved models in long-form video understanding.

Long Multimodal Input - Splitting a Video and Writing Scene Descriptions

Prompt

Please split this video into scenes, providing start time, end time, a title and detailed descriptions for each scene.

Screenshots from a 14-minute video, source: https://www.youtube.com/watch?v=Gh22bt1GYoQ


Aria Response (truncated; the full set of 12 scene descriptions is available here)

Certainly! Here are the scenes from the video, including their start and end times, titles, and detailed descriptions:

### Scene 1: Introduction

- **Start Time**: 00:01

- **End Time**: 00:13

- **Title**: Introduction

- **Description**: The video opens in a modern kitchen where an individual in a black shirt and apron stands behind a counter. The kitchen is well-organized with various spices and knives visible. The person appears to be preparing to demonstrate a cooking process.

---

### Scene 2: Cooking Demonstration

- **Start Time**: 00:16

- **End Time**: 00:29

- **Title**: Cooking Demonstration

- **Description**: The focus shifts to a close-up of a plate with sliced meat and a yellow sauce. A spoon is used to serve the meat, emphasizing the cooking process.

The video has a total of 12 scenes, each with a specific focus and detailed description.

Model Architecture

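Aria consists of a vision encoder and a mixture-of-experts (MoE) decoder. The vision encoder produces visual tokens for images and videos in their native aspect ratio. It operates in three resolution modes: medium, high, and ultra-high. A medium-resolution image is represented with 128 visual tokens; a high-resolution image is represented with 256 visual tokens; an ultra-high-resolution image is dynamically decomposed into multiple high-resolution sub-images.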
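To make the visual token budget concrete, the sketch below estimates token counts under stated assumptions. The 128 and 256 tokens per image come from the text; the 980-pixel sub-image size, the simple grid tiling for ultra-high resolution, and reading "64K" as 65,536 tokens are hypothetical choices for illustration, and Aria's actual decomposition may differ.

```python
import math

# Visual tokens per image for each resolution mode (from the text).
TOKENS_MEDIUM = 128
TOKENS_HIGH = 256

# Assumptions for illustration only; the text does not specify these.
HIGH_RES_TILE_PX = 980       # hypothetical side length of one high-res sub-image
CONTEXT_TOKENS = 64 * 1024   # "64K" read as 65,536 tokens


def ultra_high_tokens(width_px: int, height_px: int, tile_px: int = HIGH_RES_TILE_PX) -> int:
    """Estimate visual tokens for an ultra-high-resolution image, assuming it is
    split on a simple grid into high-resolution sub-images of 256 tokens each."""
    n_tiles = math.ceil(width_px / tile_px) * math.ceil(height_px / tile_px)
    return n_tiles * TOKENS_HIGH


def frames_that_fit(tokens_per_frame: int, budget: int = CONTEXT_TOKENS) -> int:
    """Frames that fit in the context window, ignoring any text tokens."""
    return budget // tokens_per_frame


print(ultra_high_tokens(3000, 2000))   # 4 x 3 grid of hypothetical sub-images
print(frames_that_fit(TOKENS_MEDIUM))  # medium-resolution frames in the window
print(256 * TOKENS_MEDIUM)             # tokens for 256 medium-resolution frames
```

If every frame is encoded at medium resolution (an assumption), a 256-frame video takes 32,768 visual tokens, about half of the 64K window.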

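The MoE decoder is multimodal native and makes predictions conditioned on both language and visual input tokens. Specifically, each MoE layer in Aria has 66 experts: 2 experts are shared across all inputs to capture common knowledge, while a router module activates 6 additional experts for each token.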
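The shared-plus-routed expert layout can be illustrated with a toy, pure-Python routing sketch. The counts (66 experts, 2 shared, 6 routed per token, leaving 64 candidates for the router) come from the text; the top-k selection with a softmax over only the selected experts, the additive combination, and the toy scaling experts and fixed router scores are assumptions for illustration, not Aria's implementation.

```python
import math
import random

NUM_EXPERTS = 66                       # experts per MoE layer (from the text)
NUM_SHARED = 2                         # shared experts, always active (from the text)
TOP_K = 6                              # routed experts activated per token (from the text)
NUM_ROUTED = NUM_EXPERTS - NUM_SHARED  # 64 candidates the router chooses from


def route(router_logits, k=TOP_K):
    """Return the indices of the k highest-scoring routed experts and their gate
    weights. Softmax over only the selected experts is an assumption here."""
    assert len(router_logits) == NUM_ROUTED
    top = sorted(range(NUM_ROUTED), key=lambda i: router_logits[i], reverse=True)[:k]
    peak = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return top, [e / total for e in exps]


def moe_layer(x, shared_experts, routed_experts, router):
    """Toy MoE layer: every shared expert processes x, plus the gated sum of the
    TOP_K routed experts chosen by the router. x is a list of floats."""
    out = [0.0] * len(x)
    for expert in shared_experts:          # shared experts see every token
        out = [o + y for o, y in zip(out, expert(x))]
    selected, weights = route(router(x))
    for i, w in zip(selected, weights):    # only the selected routed experts run
        out = [o + w * y for o, y in zip(out, routed_experts[i](x))]
    return out, selected


def make_scaling_expert(scale):
    """Hypothetical toy expert that scales its input vector."""
    return lambda v: [scale * a for a in v]


random.seed(0)
shared = [make_scaling_expert(1.0) for _ in range(NUM_SHARED)]
routed = [make_scaling_expert(0.1 * i) for i in range(NUM_ROUTED)]
fixed_scores = [random.gauss(0.0, 1.0) for _ in range(NUM_ROUTED)]


def toy_router(v):
    """Hypothetical router: ignores the input and returns fixed random scores."""
    return fixed_scores


y, chosen = moe_layer([1.0, 2.0], shared, routed, toy_router)
print(f"active experts: {NUM_SHARED} shared + {len(chosen)} routed {chosen}")
```

In this layout only 8 of the 66 experts (2 shared plus 6 routed) run for each token, which is how a fine-grained MoE keeps its activated parameter count well below its total parameter count.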

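Developing with Aria

Aria is designed to be developer-friendly, offering extensive support and flexibility. To facilitate development and collaboration, Rhymes AI provides a codebase that makes it easy to adopt Aria in downstream applications.

The codebase offers:

- Fast and simple inference with Transformers or vLLM

- Cookbooks and best practices for using Aria

- Fine-tuning Aria on various dataset formats with a single GPU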

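Looking Ahead

With the launch of Aria, we aim to redefine how AI integrates into our lives. We are focused on building tools that genuinely benefit people and expand the possibilities of technology and human connection.

We are excited to collaborate with researchers, developers, and innovative organizations to explore new frontiers in AI. Together, we can build solutions that are not only cutting-edge but also impactful and meaningful to society.

We believe the future of AI is multimodal, efficient, and seamlessly integrated into everyday experiences. Aria is just the beginning. Let's shape the future of AI together.

For any questions or discussions, feel free to join our Discord community or reach out through our feedback form.

The Rhymes Team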