从对话中提炼:在大型语言模型互动中寻找意义

在大型语言模型 (LLM) 时代,与 AI 进行对话变得越来越普遍。然而,随着这些交互变得越来越长和复杂,跟踪关键信息和洞察可能会成为一个挑战。这就是为什么需要一个个性化的对话摘要工具,帮助用户提炼 LLM 对话的精髓并保留有价值的知识。

这个名为自适应/渐进式摘要的项目,旨在通过提供一种独特的方法来解决 LLM 对话摘要的挑战。与通用摘要方法不同,我们的项目专注于创建反映个人用户互动和需求的个性化摘要。通过为每个用户量身定制摘要过程,我们确保生成的摘要不仅准确,而且与个人相关且有意义。

我们项目的一个主要特点是渐进式摘要技术。我们不是为每次交互生成一个全新的摘要,而是根据最新的对话来完善和增强现有摘要。这种方法确保了一致性,避免了摘要不连贯的问题。通过在之前的摘要基础上逐步构建,我们创建了一个全面且连贯的 LLM 交互记录。

该项目是与 Sayak Paul 在 2025 年初举行的 Vertex AI Sprint 期间合作完成的。

案例研究

该项目的核心是提示工程的关键作用。通过精心设计提示,引导 LLM 生成准确和相关的摘要,我们可以显著提高最终输出的质量。这涉及到理解 LLM 行为的细微差别,并采用有效的提示设计技术来引发所需的响应。

## System Prompt

Based on the given summary and the last conversation between you (assistant) and me (user),

update the summary following the below summary guide.

Summary Guide:

* Do not rewrite the entire summary.

* It is allowed to modify the current summary, but do not eliminate.

* Update only the specific portions necessary to reflect new information or changes.

* Only include information we have explicitly discussed.

* Do not introduce any new information or topics, even if you have prior knowledge.

* Ensure the summary is factually accurate and reflects the nuances of our discussion.

* While being detailed, also aim for conciseness and clarity in the summary.

* Use markdown formatting to make the summary more readable (using bullet points, numbers are preferred).

* Do not seprate sections for previous and updated summaries.

为了说明我们摘要技术的渐进性,让我们看一系列屏幕截图,展示摘要如何随着对话的进行而演变。这些屏幕截图是我们自己在实验本项目时在论文 s1: Simple test-time scaling 中截取的。此外,我们使用了 Gemini 2.0 Flash 进行测试。

第一次摘要:这篇论文是关于什么的?

我想要了解这篇论文的表面内容,所以我简单地问了“这篇论文是关于什么的?”,然后模型给出了回应,下面是第一次交互的摘要。在阅读完整篇论文后,我发现这份摘要写得很好,而且足够详细。

The paper "s1: Simple test-time scaling" introduces a straightforward approach to improve language model reasoning performance by using additional computation during the testing phase.

Here's a breakdown:

* **Problem:** The paper tackles the challenge of enhancing reasoning capabilities in language models (LMs) with a focus on simplicity and replicability. It notes that while recent models like OpenAI's o1 demonstrate improved performance through test-time scaling (increasing computation at test time), the methodology isn't public.

* **Approach:** The authors propose a simple method based on:

1. **Data Curation:** They create a small dataset (s1K) of 1,000 reasoning questions with associated traces, meticulously selected based on difficulty, diversity, and quality.

2. **Budget Forcing:** A technique to control the computational resources used by the LM during testing. It forcefully terminates or extends the model's reasoning process. The 'Budget Forcing' approach consists of interrupting a model that generates too many thinking tokens or prolonging its thinking duration by appending "Wait" to the model's generation when it wants to stop.

* **Methodology:**

* Supervised fine-tuning of the Qwen2.5-32B-Instruct model on the s1K dataset.

* Applying "budget forcing" to the fine-tuned model during testing to control computation.

* **Results:**

* The resulting model (s1-32B) exceeds the o1-preview model's performance on competition-level math questions (MATH and AIME24).

* Budget forcing allows for performance extrapolation beyond the model's baseline, enhancing its accuracy on AIME24.

* The authors emphasize that using just a thousand samples is enough to generate a strong model that can compete with large-scale closed source models when a 'Budget Forcing' strategy is applied.

* **Contributions:**

* A simple method for creating a sample-efficient reasoning dataset.

* A straightforward test-time scaling technique.

* A competitive language model called s1-32B.

* Ablation studies of dataset creation and test-time scaling methods.

* **Key Ideas:**

* Test-time scaling can significantly improve reasoning in LMs.

* Simple methods can be effective and efficient.

* Careful data curation is crucial for good performance.

* Budget forcing provides a controllable way to manage test-time compute.

In essence, the paper argues that strong reasoning performance and test-time scaling can be achieved through a combination of curated data and controlled test-time computation without complex reinforcement learning techniques.

第二次摘要:作者是如何收集“推理轨迹”的?

收集“推理轨迹”是一项琐碎的任务,因为没有主要的 LLM 服务提供 API 来收集它们,所以我很想知道他们从哪里获得了推理轨迹。下面是基于第二次交互更新的摘要。

值得注意的是,更新后的摘要是准确的,因为它提到了“Google Gemini Flash Thinking API”,与论文相比,并且只有处理推理轨迹的部分得到了更新,其余摘要完全没有受到影响。

.....

1. **Data Curation:** .... The reasoning traces were collected using the Google Gemini Flash Thinking API (Google, 2024). They generated a reasoning trace and solution for each question using this API.

.....

第三次摘要:s1-32B 模型是否经过微调以理解“wait”令牌?

在与LLM交互时,我能理解“Wait”令牌的作用,但我很好奇如何应用它。具体来说,我不知道是否必须微调我的本地模型才能理解Wait令牌的作用,或者它是否可以在不微调的情况下应用于所有模型。同样,只有相关部分得到了适当更新。

.....

2. **Budget Forcing:** ... The s1-32B model is fine-tuned to understand the "Wait" token as a signal to continue reasoning when it would normally stop, refining its reasoning and potentially correcting errors.

.....

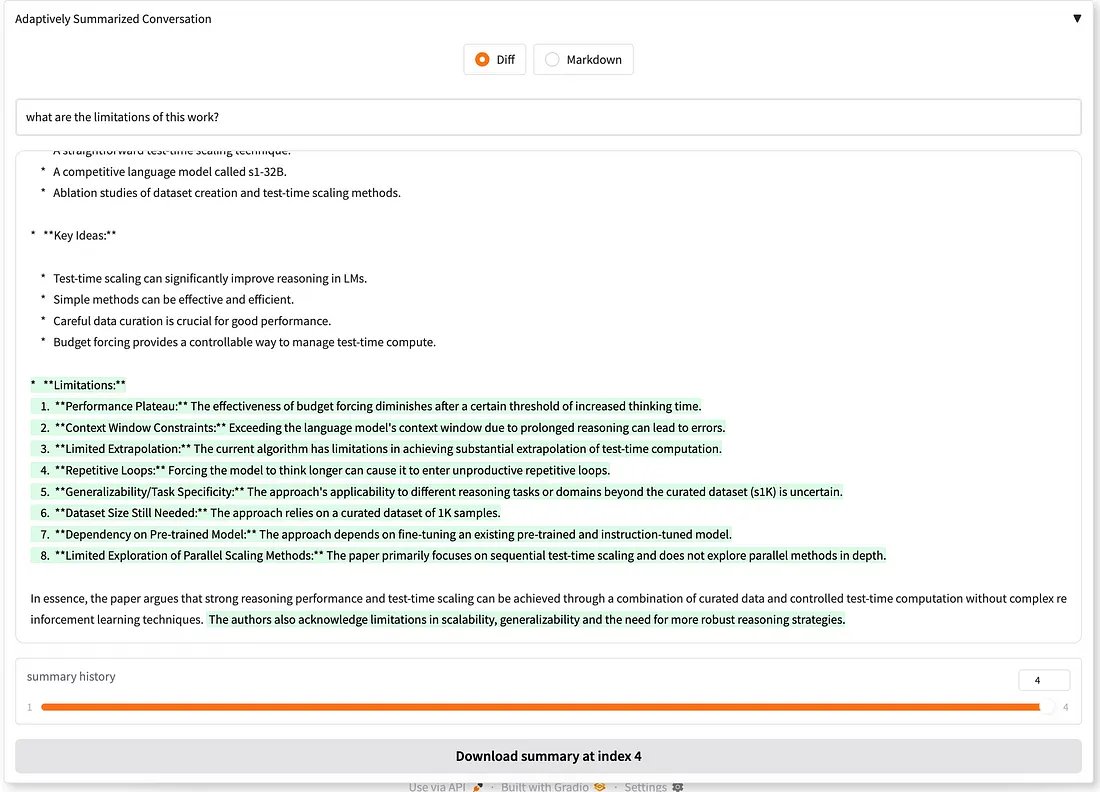

第四次摘要:这项工作的局限性是什么?

我理解了这篇论文的核心概念和机制,我想知道它的局限性。以下是在摘要末尾添加的局限性,没有影响其他部分,这使得这份摘要完美地闭合。

......

* **Limitations:**

1. **Performance Plateau:** The effectiveness of budget forcing diminishes after a certain threshold of increased thinking time.

2. **Context Window Constraints:** Exceeding the language model's context window due to prolonged reasoning can lead to errors.

3. **Limited Extrapolation:** The current algorithm has limitations in achieving substantial extrapolation of test-time computation.

4. **Repetitive Loops:** Forcing the model to think longer can cause it to enter unproductive repetitive loops.

5. **Generalizability/Task Specificity:** The approach's applicability to different reasoning tasks or domains beyond the curated dataset (s1K) is uncertain.

6. **Dataset Size Still Needed:** The approach relies on a curated dataset of 1K samples.

7. **Dependency on Pre-trained Model:** The approach depends on fine-tuning an existing pre-trained and instruction-tuned model.

8. **Limited Exploration of Parallel Scaling Methods:** The paper primarily focuses on sequential test-time scaling and does not explore parallel methods in depth.

...... The authors also acknowledge limitations in scalability, generalizability and the need for more robust reasoning strategies.

用户界面设计

为了更好地理解这种方法的有效性,跟踪摘要随时间的变化至关重要。为此,我们开发了一个简单而强大的基于 Gradio 的应用程序。

如您所见,在应用程序中,您可以浏览摘要历史并进行比较。绿色高亮文本表示与之前的摘要相比新增的内容,而红色文本表示删减的内容。此外,在长时间对话后,我们只想存储所学到的知识,这可以通过底部的“下载”按钮轻松完成。

我们已将此应用程序托管在 Hugging Face Space 上,欢迎您亲自尝试。此外,我们正在 GitHub 仓库中管理此项目的源代码。因此,请查看它们,并让我们了解您对此方法的任何意见。

致谢

该项目是在 Google ML 开发者项目团队举办的 Vertex sprints 期间构建的。我们感谢获得了大量的 GCP 积分来完成这个项目。